In the last post, we went through creating our home lab Kubernetes cluster and deploying the Kubernetes dashboard. In this post we are going to create a couple more stateless applications.

What is BusyBox?

BusyBox combines several stripped-down Unix tools into a single executable file.

It is commonly referred to as “The Swiss Army Knife of Embedded Linux”.

I started by simply testing it while following a walkthrough tutorial, but later realised that it is a great tool for troubleshooting from inside your Kubernetes cluster.

You can find more information on the BusyBox page on Docker Hub.

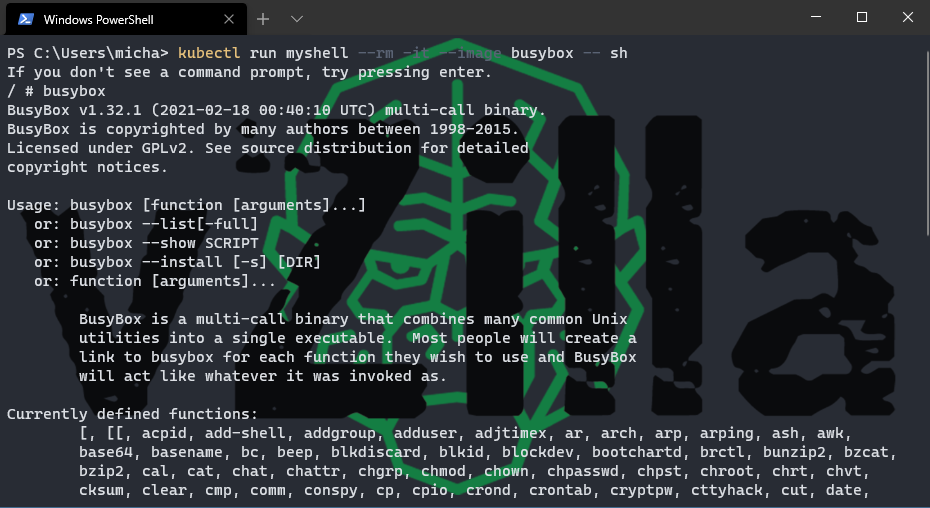

`kubectl run myshell --rm -it --image busybox -- sh`

As noted in “Some things you didn’t know about kubectl”, the `kubectl run` command above is roughly the Kubernetes equivalent of `docker run -i -t busybox sh`.

Running the command above gives you a shell inside a BusyBox pod that you can use to test connectivity and debug your Kubernetes deployments. Because Kubernetes lets you run interactive pods, you can quickly spin up a BusyBox pod and explore your deployment from inside the cluster.
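If you want a debugging pod that stays around between sessions instead of being deleted when you exit the shell, a minimal sketch is a Pod manifest like the one below. The pod name `busybox-debug` and the one-hour `sleep` are illustrative assumptions, not part of the original walkthrough. The `sleep` only keeps the container running so you can attach to it.

```yaml
# Hypothetical long-running BusyBox pod for troubleshooting.
apiVersion: v1
kind: Pod
metadata:
  name: busybox-debug          # illustrative name
spec:
  containers:
    - name: busybox
      image: busybox
      command: ["sleep", "3600"]   # keep the container alive for one hour
  restartPolicy: Never             # do not restart once the sleep finishes
```

Apply it with `kubectl apply -f busybox-debug.yaml` and open a shell with `kubectl exec -it busybox-debug -- sh`. From there you can use BusyBox tools such as `nslookup` or `wget -qO-` against your services. Run `kubectl delete pod busybox-debug` when you are finished.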

NGINX deployment

Next, we wanted to look at NGINX. It seems to be the de facto example in every tutorial and blog I have come across on getting started with Kubernetes. NGINX is open-source software for web serving, reverse proxying, caching, load balancing, media streaming and more, and the official description goes into much more detail. I began walking through the steps below to get my NGINX deployment up and running in my home lab Kubernetes cluster.

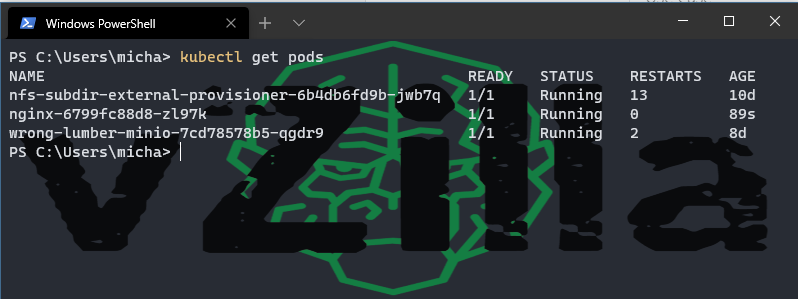

`kubectl create deployment nginx --image=nginx`
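For comparison, here is a sketch of roughly what `kubectl create deployment nginx --image=nginx` builds for you, written as a declarative manifest. The `app: nginx` label matches the one `kubectl create deployment` applies, and the later `--selector="app=nginx"` query depends on it. The `containerPort` entry is an addition for readability. The imperative command does not set it.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 1                  # kubectl create deployment starts with one pod
  selector:
    matchLabels:
      app: nginx               # the Deployment manages pods carrying this label
  template:
    metadata:
      labels:
        app: nginx             # must match the selector above
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80    # informational; NGINX listens on port 80
```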

At this point, if we run `kubectl get pods`, we should see our NGINX pod in a Running state.

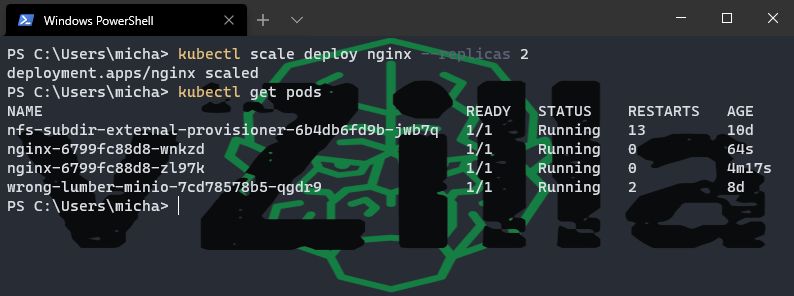

If we want to scale this deployment, we can run the following command. For this demo I am running everything in the default namespace. If you were going to keep this workload and keep working on it, you would likely create a dedicated namespace for it.

`kubectl scale deploy nginx --replicas 2`

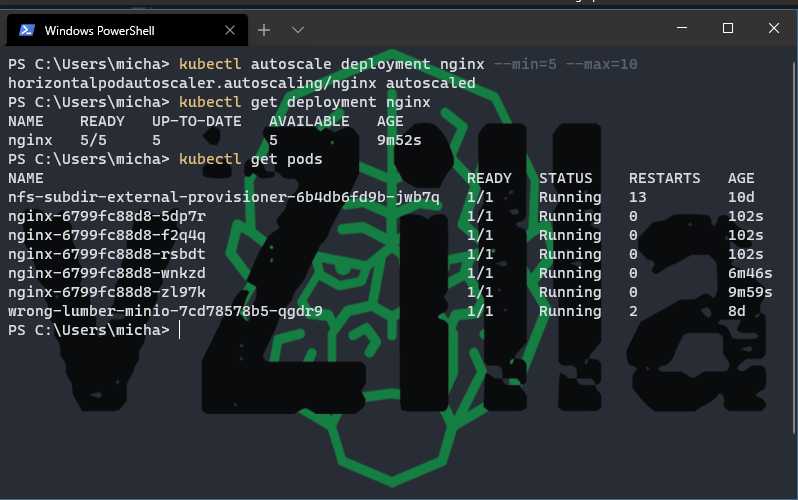
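If you want to follow the advice about avoiding the default namespace, a minimal sketch is a Namespace manifest like the one below. `web-demo` is a hypothetical name. After applying it, you would add `-n web-demo` to the `kubectl create`, `scale`, `expose` and `get` commands in this post.

```yaml
# Hypothetical namespace for the NGINX demo workload.
apiVersion: v1
kind: Namespace
metadata:
  name: web-demo
```

`kubectl apply -f namespace.yaml` creates it. `kubectl create namespace web-demo` is the imperative equivalent.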

You can scale your deployment manually with `kubectl scale`, or you can use the `kubectl autoscale` command, which lets you set a minimum and a maximum number of pods. This should be the moment where you go, hang on, this is where things get really interesting, and the reason for Kubernetes full stop. Once autoscaling is configured, Kubernetes keeps at least the minimum number of pods running. If we put load on those pods, it dynamically provisions more pods (up to the maximum) to handle that load. I was impressed, and the lightbulb was flashing in my head.
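As a sketch of the autoscaling idea, the HorizontalPodAutoscaler below targets the `nginx` deployment. The minimum of 2, the maximum of 5 and the 80% CPU target are illustrative assumptions, not values from the original walkthrough. It should be equivalent to `kubectl autoscale deployment nginx --min=2 --max=5 --cpu-percent=80`. It uses the `autoscaling/v2` API, which is available in Kubernetes 1.23 and later. CPU-based autoscaling only works if the cluster runs the metrics-server add-on and the NGINX containers declare CPU requests. Without those, the autoscaler cannot compute utilisation.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
spec:
  scaleTargetRef:              # the workload this autoscaler resizes
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 2               # illustrative floor
  maxReplicas: 5               # illustrative ceiling
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80   # illustrative: scale out above 80% of requested CPU
```

`kubectl get hpa` then shows the current and target utilisation alongside the current replica count.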

OK, so we have NGINX pods running and ready to serve web traffic, but for this to be useful we need to create a service and expose it to the network. We can do this with the following command. To begin with, we are going to use a NodePort service, as we did with the Kubernetes dashboard in the previous post.

`kubectl expose deployment nginx --type NodePort --port 80`
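The `kubectl expose` command above creates a Service along the lines of the sketch below. The `app: nginx` selector matches the label on the deployment's pods. The commented-out `nodePort` value is a hypothetical example. If you leave it out, as `kubectl expose` does, Kubernetes picks a free port from the default NodePort range of 30000 to 32767.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  selector:
    app: nginx                 # route traffic to pods carrying this label
  ports:
    - protocol: TCP
      port: 80                 # port the Service listens on inside the cluster
      targetPort: 80           # port the NGINX container listens on
      # nodePort: 30080        # hypothetical fixed port; omit to let Kubernetes choose
```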

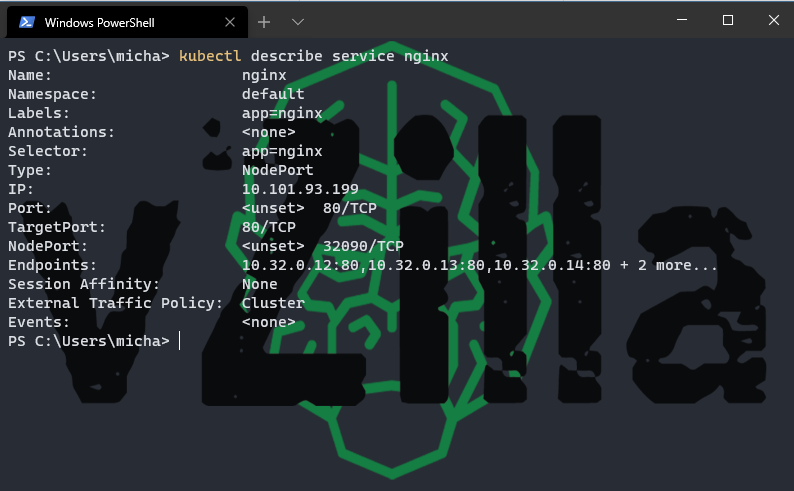

The following command shows the NodePort you need to use to access the NGINX deployment through the worker nodes:

`kubectl describe svc nginx`

Next, we run the following command to see which nodes the NGINX pods are running on:

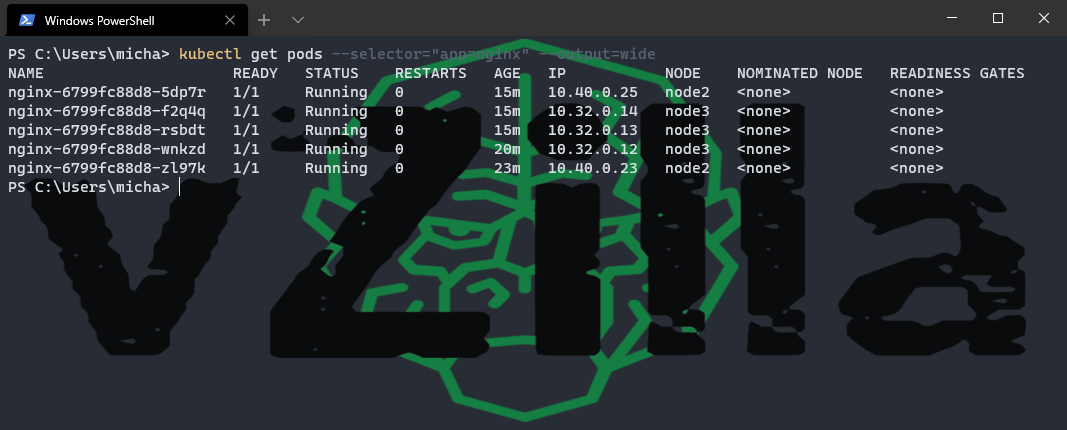

`kubectl get pods --selector="app=nginx" --output=wide`

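From the output below, we can see that the NGINX pods are running on node2 and node3, so we can use either of those node addresses. With the default Service settings, a NodePort service is reachable through every node in the cluster, so any node IP should work.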

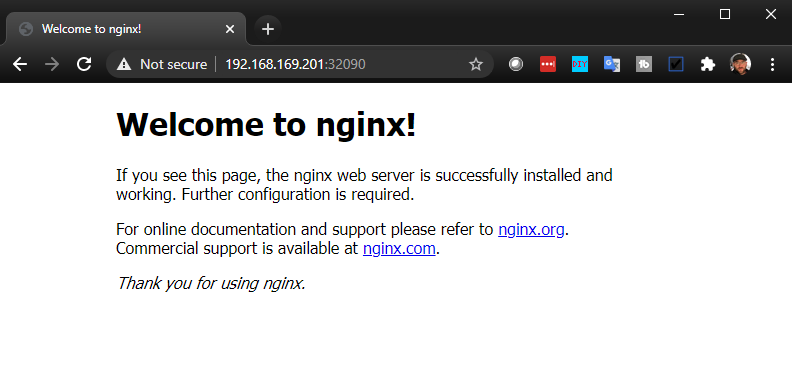

Browse to `http://<worker-node-IP>:<NodePort>` and you should see the NGINX welcome page.

So far so good: our application is up and running. We should also cover how to take what you have just created and export it to YAML files, so you can use them to recreate the same configuration and deployment again and again.

We created a deployment, so we capture it by running the command below, writing the output to a location of your choice:

`kubectl get deploy nginx -o yaml > /tmp/nginx-deployment.yaml`

Do the same for the service:

`kubectl get svc nginx -o yaml > /tmp/nginx-service.yaml`

You can then use these YAML files to deploy your workloads and keep them under version control:

`kubectl create -f /tmp/nginx-deployment.yaml`

`kubectl create -f /tmp/nginx-service.yaml`

If you created the resources from YAML, you can also delete them the same way:

`kubectl delete -f /tmp/nginx-deployment.yaml`

`kubectl delete -f /tmp/nginx-service.yaml`

Alternatively, we can delete what we have just created by name with the following two commands:

`kubectl delete deployment nginx`

`kubectl delete service nginx`

I think that covered quite a bit. Next, we are going to get into persistent storage and some of the more stateful applications, such as databases, that need a persistent storage layer. As always, please leave me feedback. I am learning with the rest of us, so any pointers would be great.
