Our session this year focuses on automation and orchestration around Veeam and VMware. But what does that mean? The point of our session is to highlight the flexibility of the Veeam Hyper-Availability Platform. Some people just want the simple, easy-to-use, wizard-driven approach to installing Veeam components in their environments, but others want that little bit more. This is where APIs come in, allowing us to drive a more streamlined and automated approach to delivering Veeam components.

We also highlighted this by running through everything live; I will get to the nuts and bolts of that shortly.

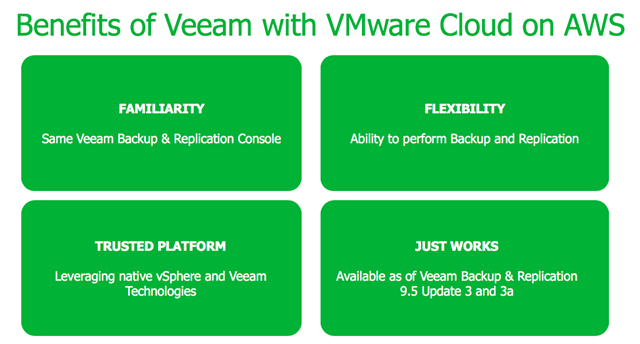

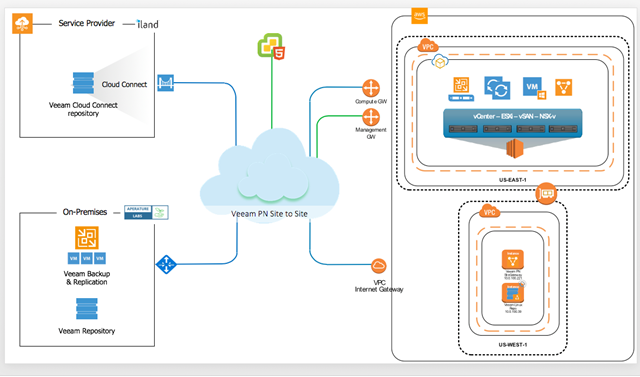

With VMware on AWS being a strong focus at this year’s event, we wanted to highlight these capabilities by running on it. Veeam were one of the first vendors highlighted as a supported data protection platform for workloads within VMware on AWS. That was a year ago, and we wanted to show those features and capabilities within Veeam.

Veeam Availability Orchestrator – “Replication on Steroids”

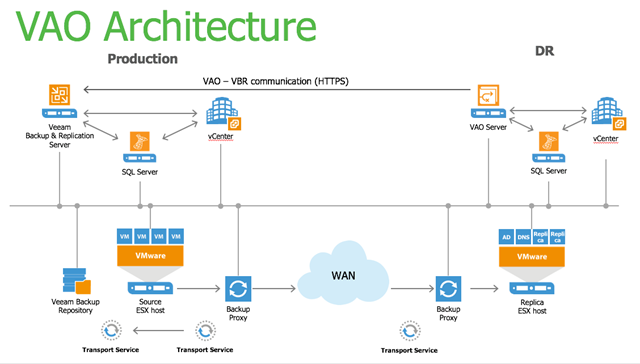

The first thing we will touch on is Veeam Availability Orchestrator, released this year. It provides a “Replication on Steroids” option for your vSphere environment. That environment can be on premises or any other vSphere environment, for example VMware on AWS, where you might still like to keep your DR location on premises and send replicas down in case of any service disruption within the AWS cloud. The replication concentrates on the application rather than just sending a VM from site to site, and it also lets you run automated testing against these replicas to simulate disaster recovery scenarios. Oh, and the other large part of this is the automated documentation. Ever had to create your own DR run book? I have. This does the majority of it for you, whilst staying dynamic to any changes in configuration.

Veeam DataLabs

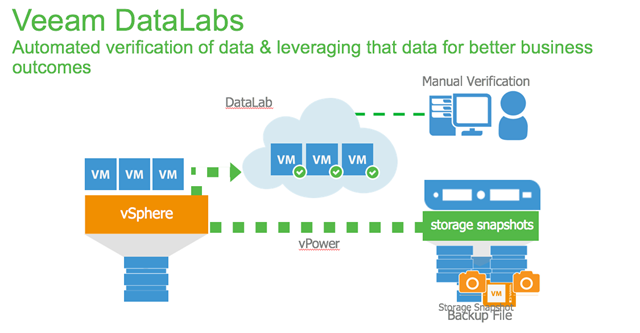

Then we wanted to highlight some more automation goodness around Veeam DataLabs. Alongside the Backup & Replication capability, this gives you an automated way of testing that your backups, replicas or storage snapshots are in a good, recoverable state. It also lets you get more leverage from those sources by providing isolated environments for gaining insight or driving better business outcomes.

I plan to follow up on this, as it is one of my passions within our technology stack. The ability to leverage Veeam DataLabs from many of the products in the platform to drive different outcomes is really where I see us differentiating in the market.

The Bulk of the session

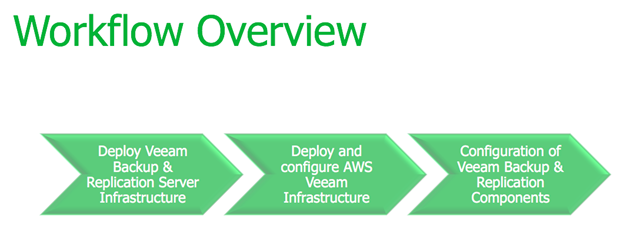

As you can see, we are already cramming quite a bit into the session. But this is the main focus of the day for us: delivering a fully configured Veeam environment from the ground up, all live whilst we are on stage. Oh, and because we can, we are doing this on VMware on AWS.

The driving use case for this was the Veeam proof of concept process. I mean, it was already fast: deploy one Windows server and 7 clicks later you have Veeam configured. Perfect. So the issue wasn’t the Veeam installation. But what if we could take an automated approach, where we spend the time understanding the customer's pain points and needs while, in the background, we automate the building of the Veeam components and automatically start protecting a subset of data, all in the first hour of that meeting?

The beauty of this is that you do not need to be a DevOps engineer skilled in configuration management or a developer in Ruby. The hard work has been done already and is available for free on GitHub and in the Chef Supermarket.

I have listed the tools below that we used to get things up and running and, to be honest, if you were to pull this down when we make it available, you will only really need PowerShell, PowerCLI and Terraform installed on your workstation.

The steps we went through live were deploying the Veeam Backup & Replication server along with multiple proxy servers to deal with the load appropriately. Because of the location of the Veeam components and our production environment, we chose to leverage native AWS services and deployed an EC2 instance for our backup repository, but this could be any storage in any location as per our repository best practices. We also added a Veeam Cloud Connect service provider to show a different media type and location for your backup or replication requirements. Finally, we automated the provisioning of vSphere tags and then created backup jobs based on those.
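To make that last step a little more concrete, here is a minimal Python sketch of the idea behind tag-based jobs: group VMs by their tags and derive one job definition per tag. This is not the code we ran on stage and it does not call any Veeam, PowerCLI or Terraform API. The `BackupJob` class, the `jobs_from_tags` function, the job naming scheme and the sample inventory are all hypothetical and exist purely for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class BackupJob:
    """Hypothetical job definition for illustration; not a Veeam API object."""
    name: str
    tag: str
    repository: str
    vms: list = field(default_factory=list)


def jobs_from_tags(vm_tags, repository):
    """Build one job per tag, covering every VM that carries that tag.

    vm_tags maps a VM name to the list of tags assigned to it.
    VMs without tags end up in no job at all.
    """
    vms_by_tag = defaultdict(list)
    for vm, tags in vm_tags.items():
        for tag in tags:
            vms_by_tag[tag].append(vm)

    # Sort tags and VM names so the result is stable between runs.
    return [
        BackupJob(name=f"Backup-{tag}", tag=tag, repository=repository, vms=sorted(vms))
        for tag, vms in sorted(vms_by_tag.items())
    ]


if __name__ == "__main__":
    # Made-up inventory; in a real environment this would come from vSphere.
    inventory = {
        "web01": ["Tier1"],
        "db01": ["Tier1", "SQL"],
        "test01": [],
    }
    for job in jobs_from_tags(inventory, repository="ec2-repo"):
        print(job.name, job.repository, job.vms)
```

The nice thing about driving jobs from tags rather than from a fixed list of VMs is that a newly tagged VM is picked up the next time the job scope is evaluated, without anyone editing the job itself.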

By the end of the session we had the following built out. Over on the right you can see we have a Veeam Backup & Replication server and some additional proxies. On the left at the top we have our Veeam Cloud Connect backup as a service offering, and at the bottom left we have our on-premises vSphere environment, where we could send further backup files or use as a target for those replication jobs. Underneath the VMware on AWS environment you can see the Amazon EC2 instance where we will store our initial backup files for fast recovery.

As I know some of you will be catching this whilst at the show, I want to give a shameless plug for the next session, which goes into more detail around the Chef element of this dynamic deployment. You can find those details below.

I also want to give a huge shoutout to @VirtPirate aka Jeremy Goodrum of Exosphere, who helped make the Terraform and Chef piece happen. He also has an article over here diving into the latest version of the cookbook and some other related code releases he has made.

Veeam Cookbook 2.1.1 released and Sample vSphere Terraform Templates

Expect to see much more content about this in the form of a whitepaper and more blogs to consume.