We have finally made it to the penultimate post in my HomeLab series, #ProjectTomorrow.

I have split this topic into two parts because it is really the fundamental reason I have a home lab at all.

I have a fully functional demo environment, so I will dive into that and into where the Veeam components reside. I also have a test environment, which lets me test another instance of the Veeam software; it is there to be spun up and spun down with no real persistence. Obviously the virtualisation piece is key to the flagship products, Veeam Backup & Replication and Veeam ONE, but I also run virtual storage arrays so that I can test and demo our snapshot and storage integration against them.

Up first is the demo side of things. As I said, this is there for the demo work I do on a day-to-day basis, so it needs to be handled with care. I need it running without problems whenever I have to demo product features.

High level Veeam diagram

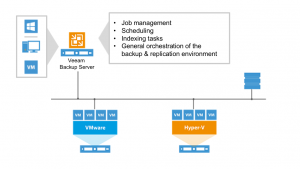

The diagram above shows the Veeam elements, their placement within the lab and which physical ESXi host they reside on. Earlier in the series I discussed placement and the specific resources available across these hosts, as well as the different cluster constructs that are also labelled here.

Veeam Components

The Veeam backup server is, as I like to explain it, the “brain” of the Veeam solution. This is where all the scheduling, indexing and job management takes place. Think of it as your central management console for controlling all other Veeam components.

The Veeam Proxy can be either virtual or physical. In my homelab they are all virtual machines, both for ease and because I am not protecting anything substantial enough to warrant dedicated hardware for performance. If the backup server is the “brain” then the proxy is the “muscle”: it is what moves the data from the live production system to the backup repository, using the most optimal route available.

The third mandatory component is the storage that holds our backup files, named the Backup Repository. Let's think of this as the “stomach”: its primary role is to store backup files and copies of data. It also keeps the metadata files for any replicated virtual machines. Technically a repository can be any storage (performance will vary depending on the disk or solution you have chosen). To summarise, it could be a Windows share, Linux via NFS, a block/SAN device or a de-duplication device.
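To make the “brain”, “muscle” and “stomach” split a little more concrete, here is a minimal Python sketch of how the three roles relate. This is purely a toy model for illustration: the class names, method names and the round-robin proxy choice are my own assumptions and have nothing to do with Veeam's actual API or its real proxy selection logic.

```python
from dataclasses import dataclass, field


@dataclass
class Repository:
    """The "stomach": stores backup data per VM (hypothetical model)."""
    name: str
    stored: dict = field(default_factory=dict)

    def write(self, vm_name: str, data: bytes) -> None:
        self.stored.setdefault(vm_name, []).append(data)


@dataclass
class Proxy:
    """The "muscle": moves data from production to a repository."""
    name: str

    def transfer(self, vm_name: str, data: bytes, target: Repository) -> int:
        target.write(vm_name, data)
        return len(data)  # bytes moved


@dataclass
class BackupServer:
    """The "brain": holds job definitions and tells proxies what to move."""
    proxies: list
    repository: Repository
    jobs: dict = field(default_factory=dict)

    def add_job(self, job_name: str, vm_names: list) -> None:
        self.jobs[job_name] = vm_names

    def run_job(self, job_name: str, read_vm) -> int:
        total = 0
        for i, vm in enumerate(self.jobs[job_name]):
            # Naive round-robin; an assumption, not how Veeam picks a proxy.
            proxy = self.proxies[i % len(self.proxies)]
            total += proxy.transfer(vm, read_vm(vm), self.repository)
        return total


if __name__ == "__main__":
    repo = Repository("local-vmdk")
    server = BackupServer([Proxy("proxy-1"), Proxy("proxy-2")], repo)
    server.add_job("management", ["dc01", "vcenter"])
    # read_vm stands in for reading a VM's disks from production.
    moved = server.run_job("management", lambda vm: vm.encode())
    print(f"moved {moved} bytes into {repo.name}")
```

The point of the sketch is only the division of labour: the server never touches the data itself, the proxies do the moving, and the repository simply stores what it is given.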

Another component I have within the environment is Veeam ONE, which lets me demo monitoring and reporting against the virtualised environment. It is great for a demo, but it is also great for seeing what is actually being used and any bottlenecks I am hitting within this demo environment.

Storage Virtual Array Placement

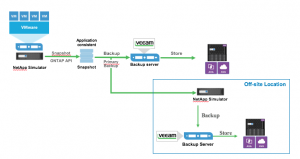

I rely heavily on being able to show the benefits of our integration with our storage vendor alliance partners, so having these virtual instances is a powerful way to demo this functionality. With both Nimble and NetApp we are able to offer a deeper integration, meaning we can orchestrate a lot of the snapshot and replication tasks via the storage, which is why you will see that I have two nodes here.

NetApp

I have at least two NetApp Sims available in my lab at any one time to demonstrate this functionality.

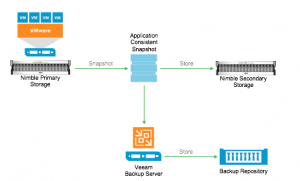

Nimble

Nimble also have a virtual appliance, which allows me to demo the same functionality using their technologies.

I also have virtual appliances for HPE StoreVirtual and EMC VNX (vVNX), which allow me to demonstrate backup from storage snapshots.

Backup target

I mentioned above that the Veeam backup repository could literally be any type of storage, and this is true. It might be the cheapest storage solution leveraging local direct-attached storage, or it might be a highly efficient global deduplication device.

The targets listed below all reside on the physical disk that I have been mentioning throughout the series. This is ultimately fine from a performance point of view: the appliances are generally not all powered on at the same time, although we can make that happen if we need to push and demo these functions.

Local Storage – I have several backup repositories that use local storage, namely VMDK disks added to the Veeam backup server. They may span several hosts, but this allows me to back up any management machines on a regular basis, as well as other VMs that sit on that physical layer.

NetApp AltaVault – I touched on this appliance in a previous post as a great cloud-integrated storage offering from NetApp. There is in fact no integration with AltaVault, but there is a technical report from both NetApp and Veeam on how the two can be used together.

AWS Storage Gateway – another appliance that doesn’t actually have any integration, but there is a technical write-up supporting it as a way to send your backup files into the AWS public cloud.

There are other virtual appliances, in fact an endless number of them, that I could roll out in the environment, but these few options give me enough to demo to our prospects and partners.

Tomorrow I will touch on everything else Veeam-related that I have within the HomeLab environment. As always, please leave me some feedback; I want to know whether this was useful or not so much.