Ransomware is a threat we seem to hear about daily, and it is hitting every sector. I have been saying for a while that everyone should be concerned: it is just a matter of time before you are attacked and have to face your own ransomware story. This post highlights how to prevent your cloud workloads from being easily exposed, and briefly covers remediation and how to get back on your feet.

In a previous post, I wrote about Pac-Man as a mission-critical application. It is a great way to show off stateful data within Kubernetes, because the high scores are stored in a MongoDB database. I have been running ad hoc demos of Kasten K10 in various clusters and platforms, but something I found in AWS was worth sharing.

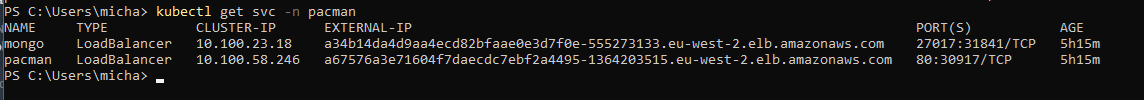

I will not repeat the build-up of the Pac-Man configuration from that previous post. In short, we have a front-end NodeJS web server (this is where we play Pac-Man) and a MongoDB back end where we store the high scores. A service is created for each pod, exposing it through the AWS load balancer.

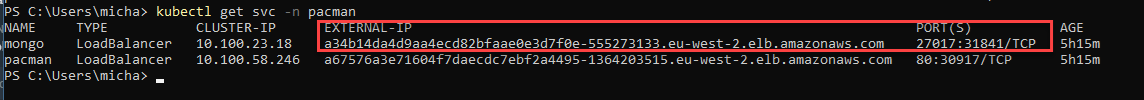

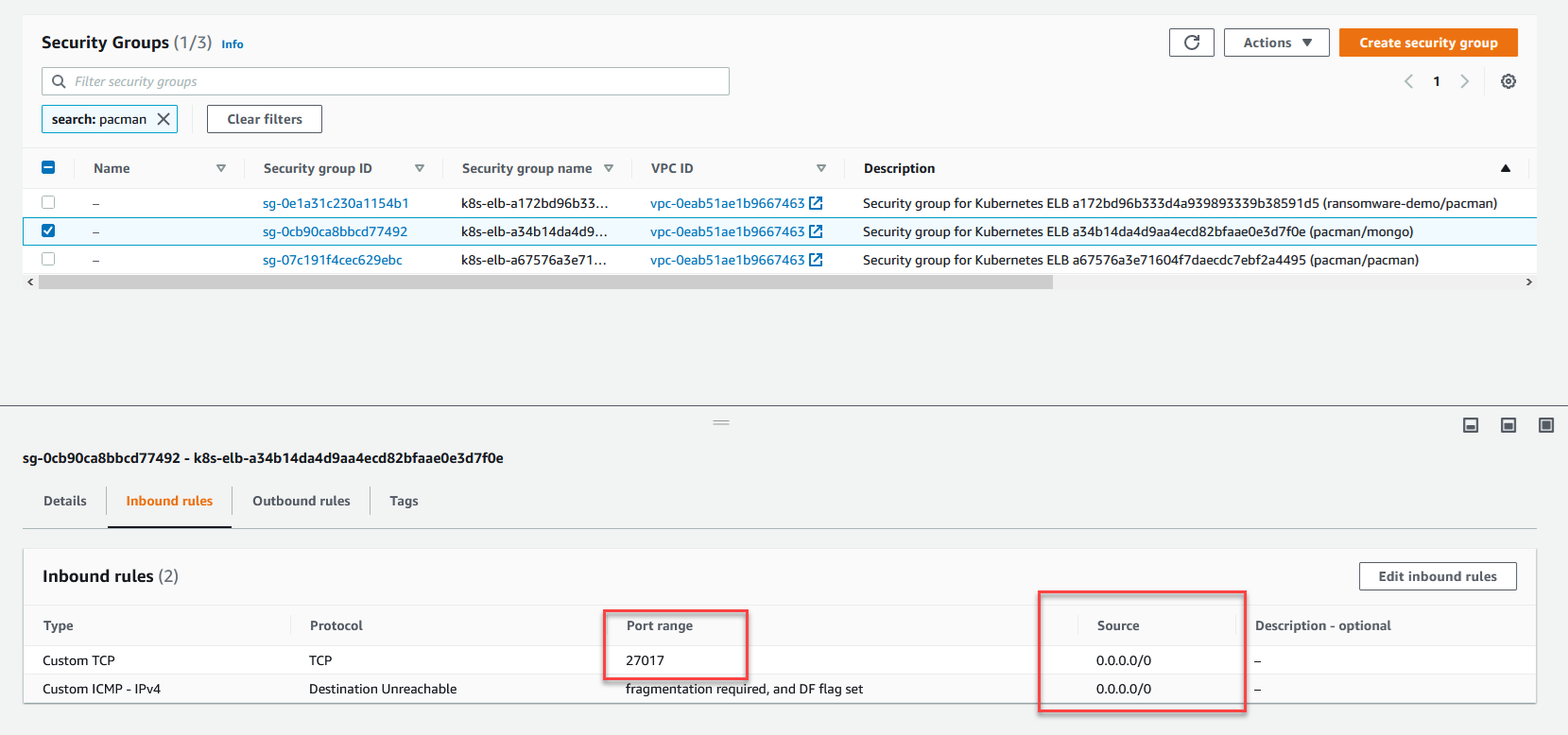

The deployment uses load balancers. If we apply this to an EKS cluster, we get an ELB by default, which gives us an AWS DNS name linked to the load balancer, which in turn forwards to our pods. As you can see in the screenshot, the security group created for this load balancer is wide open to the world.
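To make "wide open" concrete, here is a minimal sketch of how you might flag that kind of rule. It assumes the security group is given as a plain dictionary shaped like the entries boto3 returns from the EC2 `describe_security_groups` call (`IpPermissions`, `IpRanges`, `CidrIp` and so on). The function name `world_open_rules` and the sample group are hypothetical and only there for illustration; this is not the group from my lab.

```python
from ipaddress import ip_network


def world_open_rules(security_group):
    """Return (protocol, from_port, to_port, cidr) for each ingress rule open to any address."""
    findings = []
    for perm in security_group.get("IpPermissions", []):
        cidrs = [r["CidrIp"] for r in perm.get("IpRanges", [])]
        cidrs += [r["CidrIpv6"] for r in perm.get("Ipv6Ranges", [])]
        for cidr in cidrs:
            # A prefix length of 0 matches every address (0.0.0.0/0 or ::/0).
            if ip_network(cidr, strict=False).prefixlen == 0:
                findings.append(
                    (perm.get("IpProtocol"), perm.get("FromPort"), perm.get("ToPort"), cidr)
                )
    return findings


# Hypothetical group: MongoDB open to the world, SSH limited to one range.
example_group = {
    "GroupId": "sg-example",
    "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 27017, "ToPort": 27017,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}], "Ipv6Ranges": []},
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "IpRanges": [{"CidrIp": "203.0.113.0/24"}], "Ipv6Ranges": []},
    ],
}

for rule in world_open_rules(example_group):
    print(rule)
```

With the sample data only the MongoDB rule is reported, because the SSH rule is limited to a specific range.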

Obviously, there are some gaping holes here, both in the security group configuration and in the very limited access control for the application itself. But I wanted to highlight that bad things happen, and mistakes happen. Let’s get into this.

High level – Bad Practices

Basically, by configuring things this way our services are very exposed. Our application works and takes advantage of all the good things about Kubernetes, AWS and the public cloud in general (this is not limited to AWS), but setting things up as above is not best practice, especially when the data is more critical than Pac-Man high scores.

Before we talk about turning these bad practices into best practices, let me talk about the honeypot and some of the reasons why I did this.

The Ransomware Attack

I have been involved in a lot of online video demos over the last 12 months, and the creativity has to be on point to keep people interested while still getting the point across.

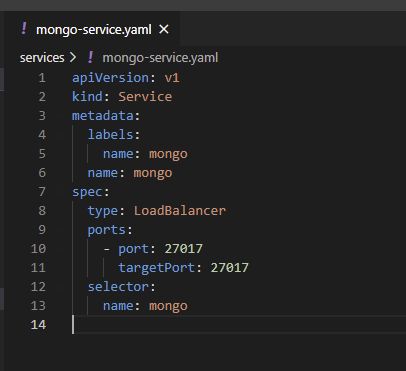

The service created for Mongo is shown below. When deployed, it takes advantage of whatever load balancer is available to the Kubernetes cluster. When I wrote the original blog this was MetalLB, and the service was only exposed on my internal home network. When you get to AWS or any of the public cloud offerings, this becomes a public-facing IP address, which means you have to be much more aware of it. More on this later.

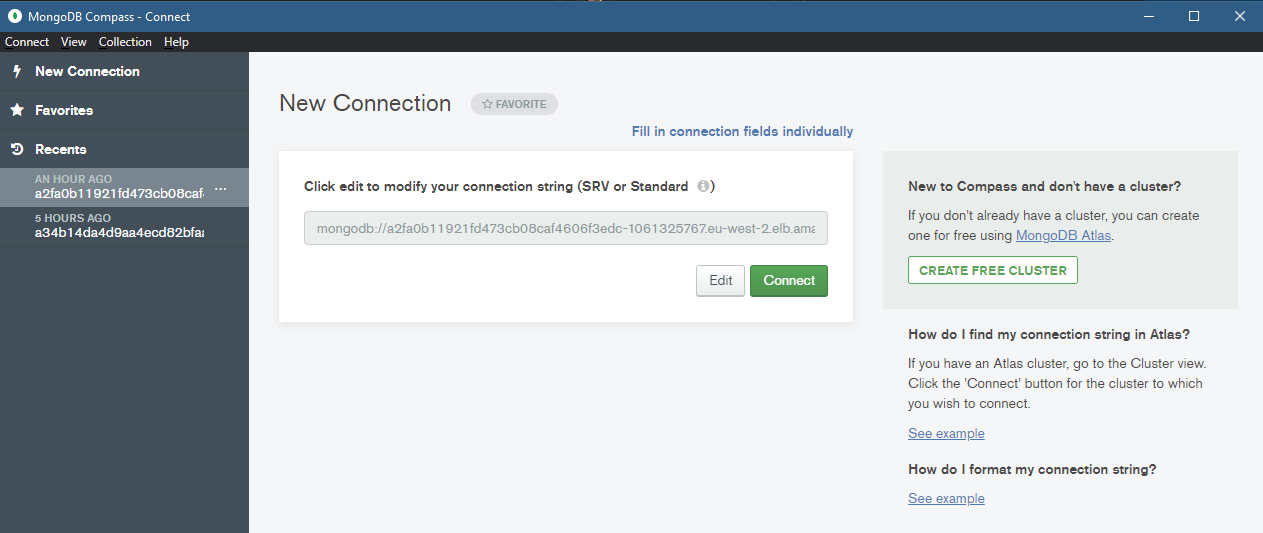

At that point, with the default security group settings in AWS, it is very easy to reach your Mongo instance from any internet-connected device. I will walk through the process now. First of all, you will need MongoDB Compass, which you can download for your OS from the MongoDB website.

Once downloaded, you can run it and test the unsecured connectivity to your Mongo instance. For this you will need the public-facing DNS name from AWS; in our case we have access to our Kubernetes cluster, so we can run the following command.

kubectl get svc --namespace pacman

Then, within MongoDB Compass, you can add the following and connect from anywhere, because everything is open. Notice as well that we are using the default port. This is the attack surface: how many Mongo deployments out there use this same approach with access not secured?

mongodb://External-IP:27017
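If you want a quick way to check your own exposure without opening Compass, a small reachability test does the job. The sketch below only checks whether a TCP connection to the port can be opened; it does not speak the MongoDB protocol or say anything about authentication. The function name `port_is_reachable` is my own, and you should only point it at hosts you own.

```python
import socket


def port_is_reachable(host, port=27017, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS lookup failures.
        return False
```

Run from a machine outside your cluster and outside your VPC, `port_is_reachable("External-IP")` (with the value from the service listing in place of the placeholder) coming back `True` means anyone on the internet can at least knock on the door of your database.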

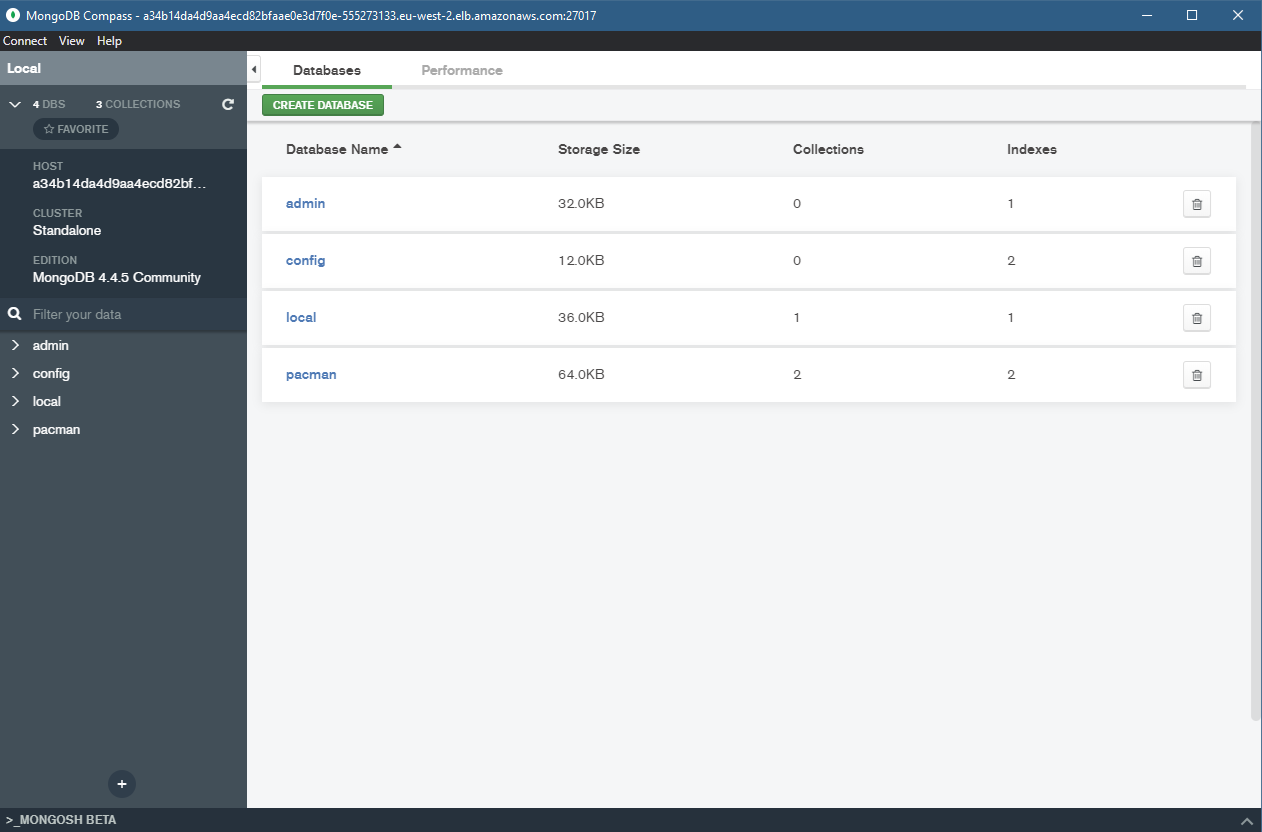

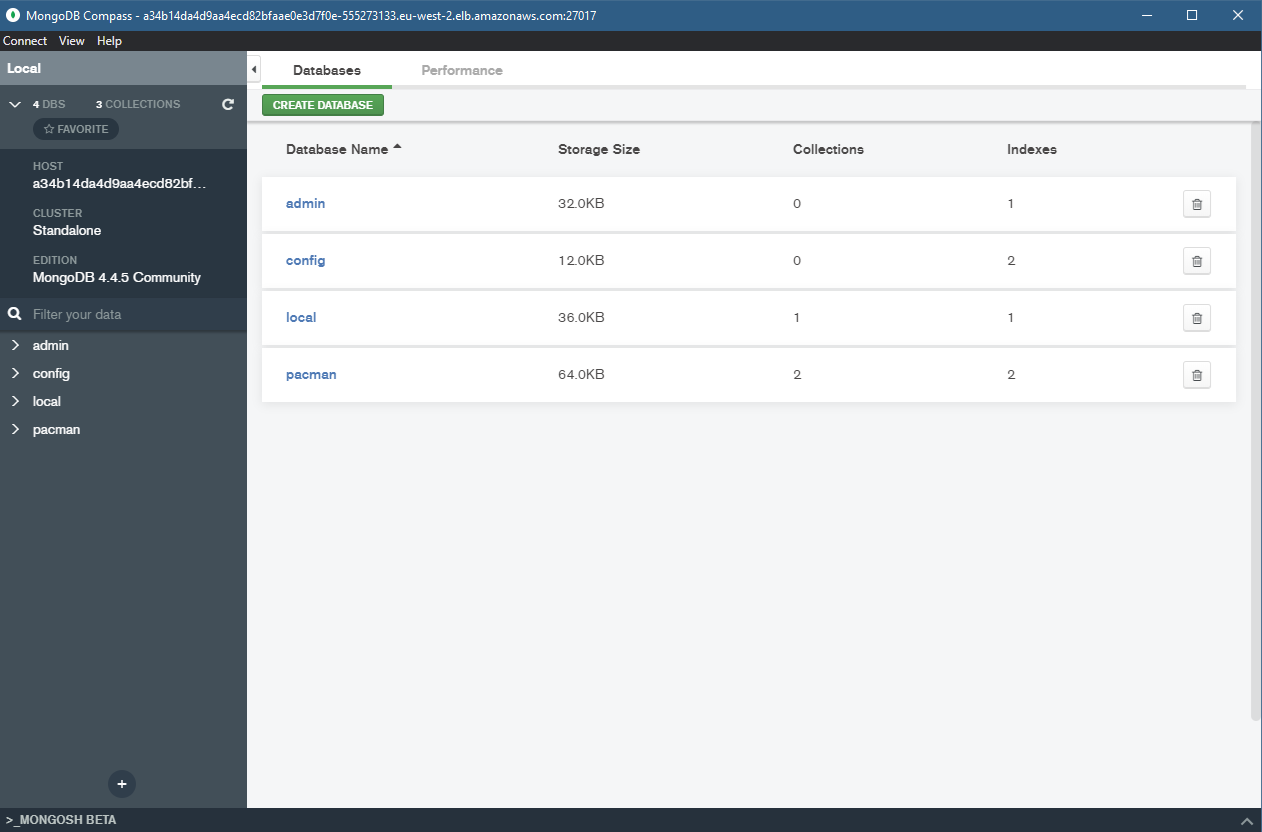

Here is a good copy of our data; you can see our Pac-Man database there, holding our high scores.

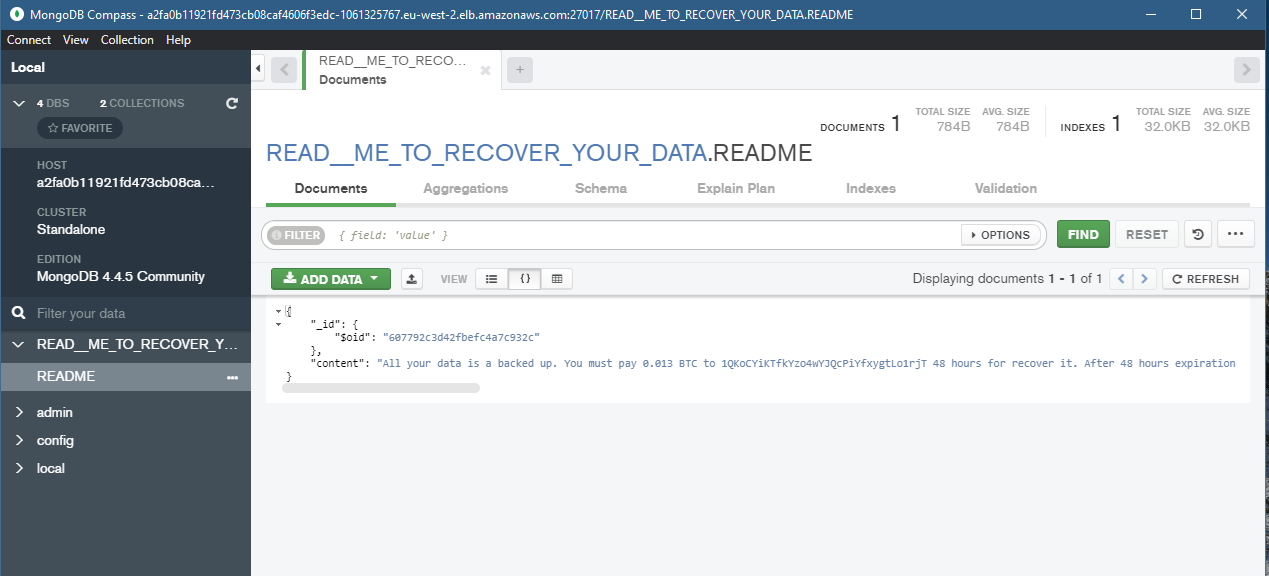

Now we can flip to what happens next. Once this was exposed, it was 12 hours at most before the attack was made, sometime between 4 am and 5 am. Remember, there is no important data here; the experiment is there to highlight two things. First, make sure you have thought about access security for every part of your application, so that not everything is exposed to the world. Second, and this is my main point and the reason for the demo, make sure you have a backup! The first point protects you through prevention; the second is what you need when things go wrong. I cannot help you much with the data you are storing in your database, but make sure you are regulating that data, and that you know what it is and why you are keeping it.

As you can see from the above, we now have a new database with a readme entry that gives us the details of the attack, and no Pac-Man database: it has been removed and is no longer available to our front-end web server. Just like that, because of an “accident” or misconfiguration, we have exposed our data and in fact lost it, with a ransom demanded for its return.

The Fix and Best Practices

I can only imagine what this feels like when it is real life and not a honeypot test for a demo! That is why I wanted to share this. Throughout this post I have been mentioning the need to check security and access on everything you do: start from least privilege and work from there. DO NOT OPEN EVERYTHING TO THE WORLD. That probably seems like simple advice, but if you google MongoDB ransomware attacks you will be amazed at how many real companies get attacked and suffer from this same access issue.
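As one example of what least privilege could look like for the Mongo service, here is a rough sketch that builds a Service manifest using the Kubernetes `loadBalancerSourceRanges` field, which asks the cloud load balancer to admit only the listed CIDR ranges. The service name, the `name` selector label and the example range are assumptions for illustration, not values taken from my deployment, so adjust them to match yours.

```python
import json


def restricted_mongo_service(allowed_cidrs, name="mongo", namespace="pacman"):
    """Build a LoadBalancer Service manifest that only admits the given CIDR ranges."""
    if not allowed_cidrs or any(c in ("0.0.0.0/0", "::/0") for c in allowed_cidrs):
        raise ValueError("pass specific CIDR ranges, not the whole internet")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "LoadBalancer",
            # Only these source ranges are allowed through the load balancer.
            "loadBalancerSourceRanges": list(allowed_cidrs),
            "selector": {"name": name},  # assumed pod label, change to match your pods
            "ports": [{"port": 27017, "targetPort": 27017}],
        },
    }


# 203.0.113.0/24 is a documentation range standing in for your office or VPN range.
print(json.dumps(restricted_mongo_service(["203.0.113.0/24"]), indent=2))
```

kubectl accepts JSON as well as YAML, so the output can be piped into `kubectl apply -f -`. It is also worth asking whether the database needs a load balancer at all: only the front end talks to Mongo, and it can do that inside the cluster through a ClusterIP service.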

The second step, after configuring your security correctly, is making sure you have a solid backup. The failure scenarios are the same whether we are talking about physical systems, virtualisation, cloud or cloud-native. The attacker did not care that this was a Mongo pod within a Kubernetes cluster; it could just as easily have been Mongo on an EC2 instance exposed to the public in the same way. Backups are what will help you remediate the issue and get back up and running.

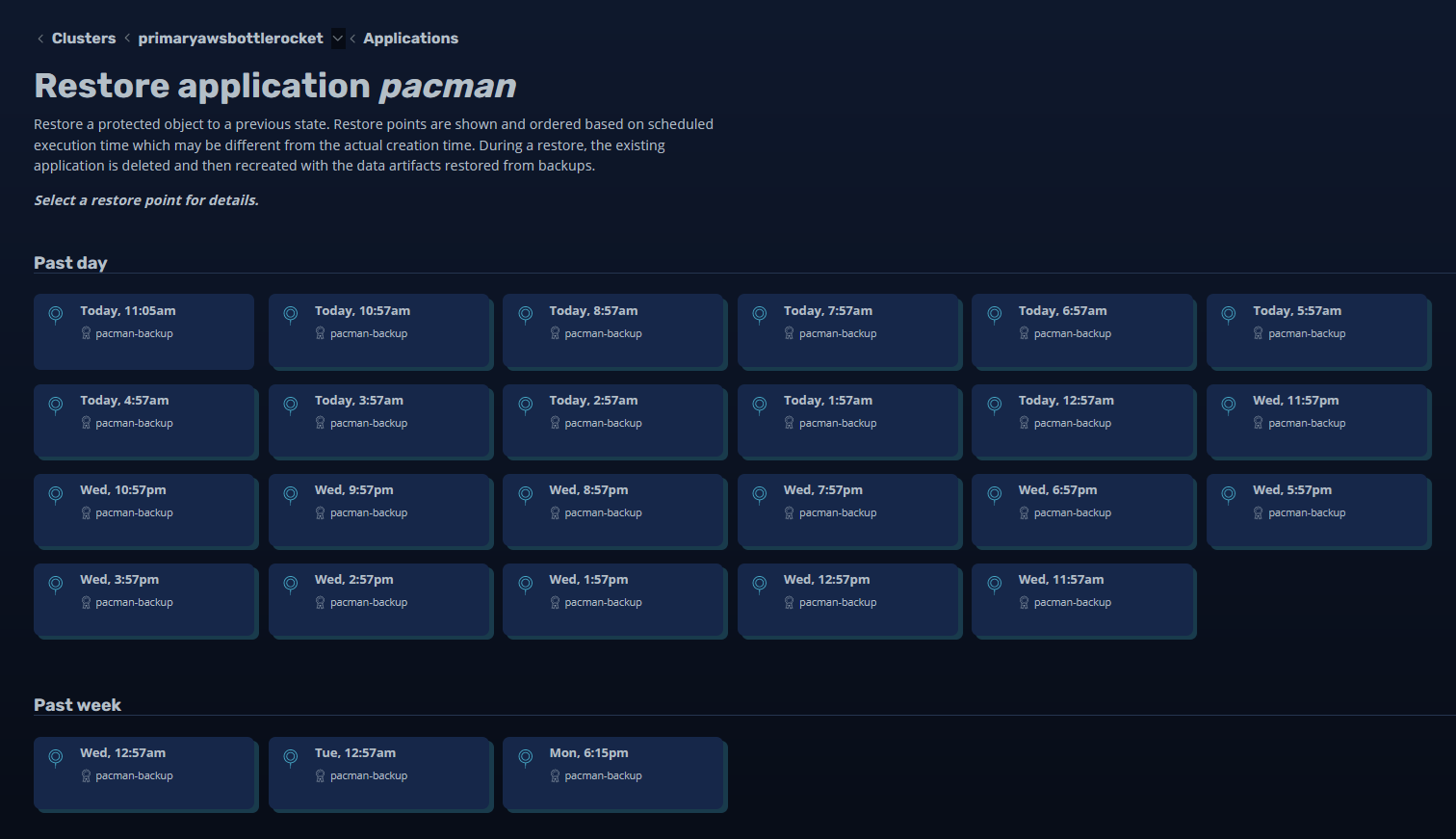

I was of course using Kasten K10 to protect my workloads, so I was able to restore and get back up and running quickly; it’s all part of the demo.

And we are back in business with that restore.

Any questions, let me know. No data was harmed in the making of this blog and demo, and I have deleted everything that may have been exposed in the screenshots above. I would also note that if you are walking through my lab and running through the examples again, be conscious of where you are running them. At home on your own network using MetalLB you are going to be fine, as the services will only be exposed to your home network. In AWS or any of the other public cloud offerings they will be public-facing and available for the internet to see and access.