It’s easy to forget some of the ground-breaking things we have been doing over the years. I am sure you have all seen the reverse roadmap from Veeam listing everything that has been released, how it changed the way we move data around from a protection point of view, and how we interact with our data for different use cases. One of these features is the set of data transport modes available in Veeam Backup & Replication, which allow us to move data from production to our backup storage as fast as possible.

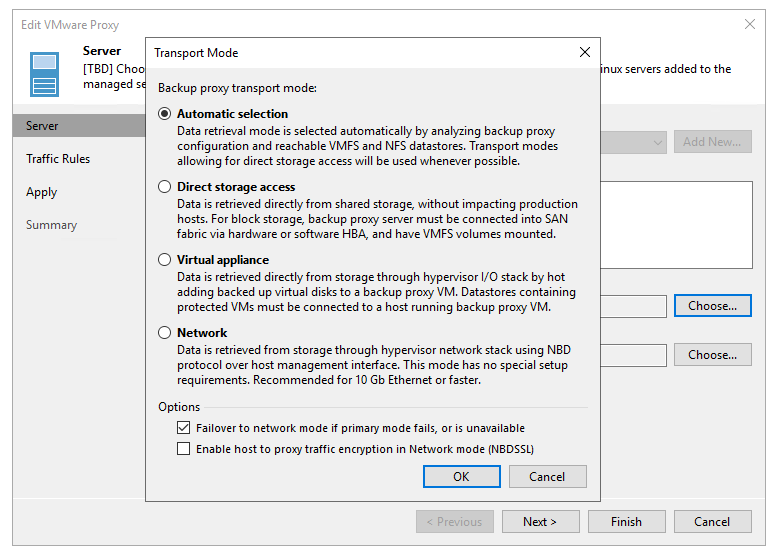

I expect most people leave the transport mode setting at its default, automatic, when configuring their proxies.

And I expect those of you who have used this as your default proxy setting have been getting on just fine, and your Veeam backups have been functioning with no regrets for weeks. But what if I told you there could be a better way of moving that data from A to B?

If you have left the defaults on and your proxy is a virtual machine, you are most likely getting the Virtual Appliance (HotAdd) mode. If your proxy is physical, the Network (NBD) mode is most likely the transport mode in use. The easiest way to see this is in your last backup job.

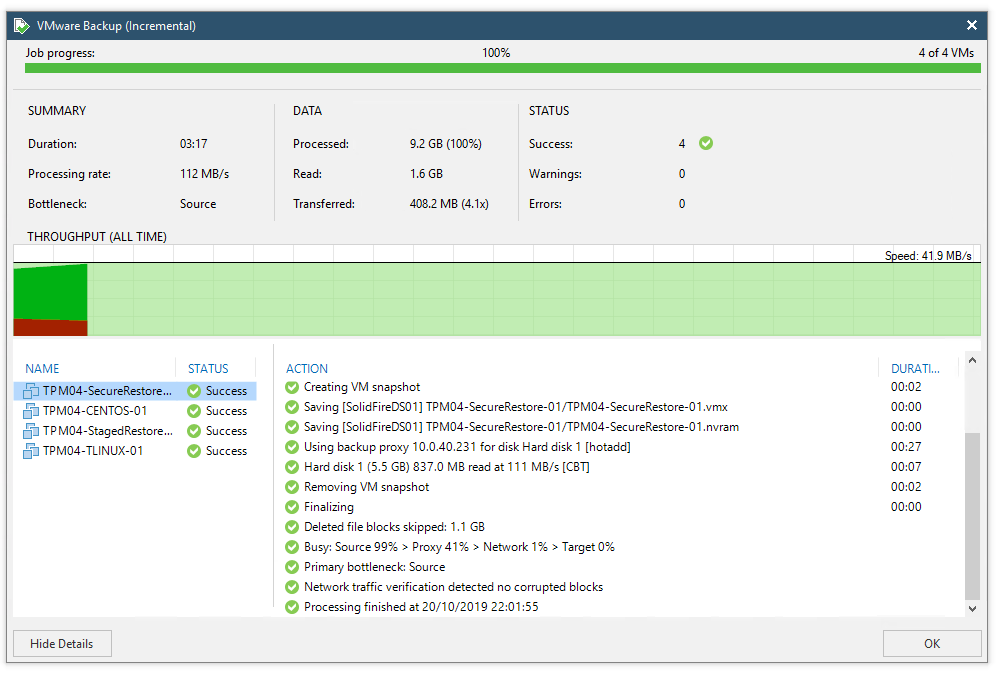

You can see above that my proxy is using the HotAdd transport mode because my proxy is a virtual machine. In this instance it is the new Linux proxy we have coming in v10. More on that in further posts.
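The rule of thumb above can be written down as a tiny decision function. This is only a sketch of the behaviour described in this post, not Veeam's actual selection logic: the names `TransportMode` and `likely_automatic_mode` are made up for illustration, and the assumption that a proxy with the production storage presented to it ends up on Direct Storage Access is a simplification.

```python
from enum import Enum


class TransportMode(Enum):
    DIRECT_STORAGE = "Direct storage access"
    HOT_ADD = "Virtual appliance (HotAdd)"
    NETWORK = "Network (NBD)"


def likely_automatic_mode(proxy_is_virtual: bool,
                          storage_presented_to_proxy: bool = False) -> TransportMode:
    """Guess which mode 'automatic' lands on (illustrative rule only)."""
    # Assumption: if the proxy can see the production LUN/volume directly,
    # direct storage access is preferred over the other two modes.
    if storage_presented_to_proxy:
        return TransportMode.DIRECT_STORAGE
    # A virtual proxy can hot-add the VM disks to itself.
    if proxy_is_virtual:
        return TransportMode.HOT_ADD
    # Otherwise the data is pulled over the network (NBD).
    return TransportMode.NETWORK


if __name__ == "__main__":
    print(likely_automatic_mode(proxy_is_virtual=True).value)
    print(likely_automatic_mode(proxy_is_virtual=False).value)
```

The real selection depends on more than these two flags, so treat the output as a first guess and confirm it against the job statistics.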

OK, that’s great: we are getting good backups, and to me that’s fine and fast. However, those with a keen eye will see that the datastore my machines are on is in fact a SolidFire LUN presented to my VMware environment, which opens up other options for our transport modes. We have an integration with SolidFire and the NetApp HCI offering, but we also have that second option from the opening image, Direct Storage Access, which gives us a different way to pull data from the production storage, aside from the Network and Virtual Appliance modes we already touched on.

Let’s see if we can improve backup times and windows.

Direct Storage Access

Direct Storage Access over NFS or SAN is going to be a much faster and less impactful way of taking your backups. The only transport mode that will better it is Backup from Storage Snapshots, which we will cover next. The downside is that there are some caveats to consider: it does take some configuration, and it requires a physical proxy to attach into a Fibre Channel SAN for access. That is maybe why a lot of you are achieving good backups, and that’s great, but haven’t gone digging for how to make this better for your environment. If everything is green, why would you?

One major benefit of this transport mode is there is zero impact on hosts and production networks. Dedicated backup proxies grab required data directly from SAN and the backup traffic is isolated to SAN fabric.

This transport mode allows the production LUN or volume to be presented to your Veeam backup proxy, and the data is read from there in a read-only state.

For Direct SAN Access, here are a few additional best practices:

- If you have to decide between more proxies with fewer CPUs or fewer proxies with more CPUs: choose fewer proxies with more CPUs, as it reduces the physical footprint

- Remember to zone and present all new LUNs to the backup proxies as part of the new storage provisioning process. Otherwise, the backup job will fail over to another mode

- Use manual datastore to proxy assignments

- Forgo Direct SAN Access if you can use Backup from Storage Snapshots or Direct NFS

- Use thick disks while doing thin provisioning at the storage level to enable Direct SAN restores

- Update MPIO software (disabling MPIO may increase performance)

- Update firmware and drivers across the board (proxies and hosts!)

- Find the best RAID controller cache setting for your environment

- If you are using iSCSI, check out these Veeam Forum threads about increasing network performance, the VddkPreReadBufferSize DWORD value and Netsh tweaks.

For NFS environments on arrays that aren’t supported for Backup from Storage Snapshots, be sure to use Direct NFS! It’s highly optimized and really a second generation of our NFS client (it was really “born” from our NetApp integration in 2014).

The steps taken with Direct Storage Access can be found in the Veeam User Guide here.
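The zoning reminder in the best practices above lends itself to a quick pre-flight check. The sketch below is only an illustration: it assumes you already have two inventories to hand (which LUN backs each datastore, and which LUNs the proxy can see), and the function name `luns_missing_on_proxy` and all identifiers in the sample data are hypothetical.

```python
def luns_missing_on_proxy(datastore_luns: dict[str, str],
                          proxy_visible_luns: set[str]) -> dict[str, str]:
    """Return the datastores whose backing LUN is not presented to the proxy.

    datastore_luns maps a datastore name to its LUN identifier;
    proxy_visible_luns is the set of LUN identifiers the proxy can see.
    """
    return {
        datastore: lun
        for datastore, lun in datastore_luns.items()
        if lun not in proxy_visible_luns
    }


if __name__ == "__main__":
    # Hypothetical inventory, for illustration only.
    datastores = {"datastore-01": "lun-a", "datastore-02": "lun-b"}
    visible = {"lun-a"}
    for datastore, lun in luns_missing_on_proxy(datastores, visible).items():
        print(f"{datastore}: {lun} is not presented to the proxy")
```

Any datastore this reports is one where a job would be expected to fail over to another transport mode rather than read directly from the SAN.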

Backup from Storage Snapshots

In addition to Direct Storage Access, there is also the ability to leverage the Veeam storage integrations with many storage vendors. This uses a very similar flow to Direct Storage Access, but rather than the production LUNs or volumes being exported or presented to your backup proxy, it is the storage snapshots that are presented, meaning you are not sharing IO with the production LUN/volume within VMware. The complication, if it can be called that, is that you need to perform largely the same configuration steps as with Direct Storage Access, although some are provisioned for you as part of the storage integration.
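One way to see the difference between the two flows is to compare what happens while the VMware snapshot is open. The step lists below are a simplified sketch based on my understanding of the two modes, not an exact trace of what the product does, and the helper `steps_while_vmware_snapshot_open` is made up for this illustration.

```python
DIRECT_STORAGE_ACCESS = [
    "create VMware snapshot",
    "read VM disks from production LUN",
    "remove VMware snapshot",
]

BACKUP_FROM_STORAGE_SNAPSHOT = [
    "create VMware snapshot",
    "create storage snapshot",
    "remove VMware snapshot",
    "present storage snapshot to proxy",
    "read VM disks from storage snapshot",
    "remove storage snapshot",
]


def steps_while_vmware_snapshot_open(steps: list[str]) -> list[str]:
    """Return the steps that run between creating and removing the VMware snapshot."""
    start = steps.index("create VMware snapshot")
    end = steps.index("remove VMware snapshot")
    return steps[start + 1:end]


if __name__ == "__main__":
    print(steps_while_vmware_snapshot_open(DIRECT_STORAGE_ACCESS))
    print(steps_while_vmware_snapshot_open(BACKUP_FROM_STORAGE_SNAPSHOT))
```

In this simplified model the data read happens inside the VMware snapshot window for Direct Storage Access, but outside it for Backup from Storage Snapshots, where the read is served from the storage snapshot instead of the production LUN.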

There are a huge number of storage integrations now with Veeam Backup & Replication.

These lists are only going to grow over the coming months and years, and it’s not just Backup from Storage Snapshots that they bring to your environment but a whole load more that can make life easier.

One final thing: go and see if you can better the performance of your Veeam backups. Just because everything is green doesn’t mean it’s the best.

Hi there!

Thank you for your article which gave me some new insights.

But I was interested in the following statement regarding direct storage access:

“One major benefit of this transport mode is there is zero impact on hosts and production networks.”

Is this really true? From what I understand, your virtualization platform still creates a snapshot (not a storage snapshot), which affects performance to some degree. Am I missing something?

Of course traffic will flow through SAN fabric and not your network which is always nice.

Thanks,

T.

Yes, my statement says that it will not impact the host production networks. It will still require a VMware snapshot, but the backup traffic will not traverse the ESXi network; it will go directly from the SAN/NAS to the proxy component.

Thomas, you should also take a look at the v10 capabilities linked to our orchestrated storage snapshots where, if architected and created correctly, we can now take an application-consistent storage snapshot without the requirement of taking a VMware snapshot. The use case to think about here is the high-IO workloads that cannot withstand a VMware snapshot.