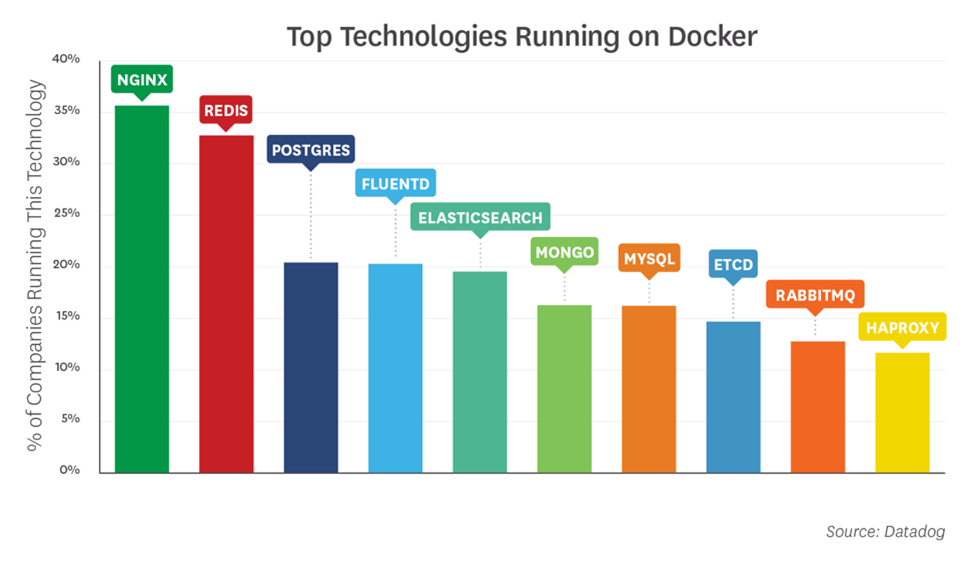

Some may say that Kubernetes is built only for stateless workloads, but one thing we have seen over the last 18-24 months is an increase in stateful workloads. Think of your databases, messaging queues and batch processing functions, all of which need some state to stay consistent in order to work. Some people also believe that this state should live outside the cluster, where it can be consumed by the stateless workloads running within the Kubernetes cluster.

The people have spoken

In this post, we are going to talk briefly about the storage options available in Kubernetes and then spend some time on the Container Storage Interface (CSI). CSI has enabled storage vendors and cloud providers to fast-track development and offer cloud-native storage solutions for those stateful workloads.

Before CSI

Let’s rewind a little. Before CSI there was the concept of in-tree, meaning the storage plugin code was part of the core Kubernetes code. As a result, new in-tree providers for the various storage offerings were delayed and only became available when the main Kubernetes code was shipped and released. It was not only new in-tree provisioner plugins that had to wait. Bug fixes had to wait too, which slowed adoption for all the storage and cloud vendors wanting to bring their offerings to the table.

On the Kubernetes side, there was also the risk of third-party code causing reliability and security issues. Then there is testing: how would the code maintainers test and ensure everything was good when, in some cases, they had no access to the physical storage systems?

CSI goes a long way towards resolving most of these issues, and we are going to get into how shortly.

Kubernetes Storage Today

Basically, we have a blend of in-tree providers and the new CSI drivers. We are in a transition period that may end with everything moving over to CSI and in-tree being removed completely. Today, especially within the hyperscalers (AWS, Azure and GCP), you will find that the default storage options use the in-tree provider, and you have access to alpha and beta code to test out the CSI functionality. I have more specific content coming on this in later posts.

With in-tree you do not need to install any additional components, whereas with CSI you do. In-tree is the easy button, but easy is not always the best option.

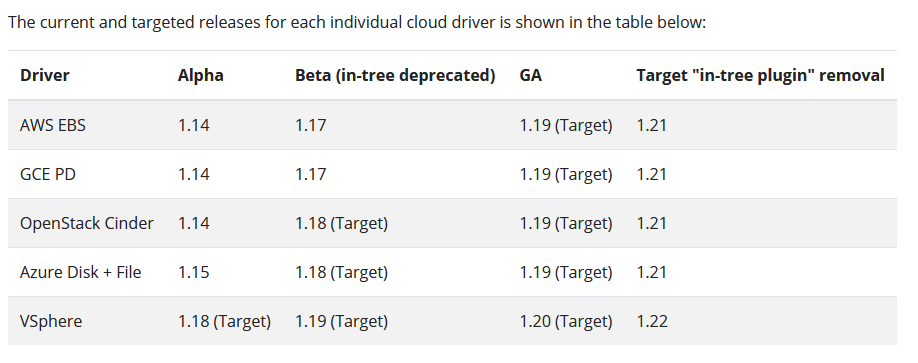

Before you can start consuming underlying infrastructure resources through CSI, the drivers must be installed in your cluster. To be honest, I am not sure whether or how this will change in the future. The table shows the current and targeted time frames for some of the specific CSI driver releases; some are available now for us to test and try, while others are targeted for later releases.

Source – https://kubernetes.io/blog/2019/12/09/kubernetes-1-17-feature-csi-migration-beta/
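To make the in-tree versus CSI split concrete, here is a minimal Python sketch that classifies a StorageClass `provisioner` string. It rests on two assumptions: in-tree provisioners follow the `kubernetes.io/` prefix convention (a convention, not a hard rule), and the small lookup table of in-tree plugins and the CSI driver names they migrate to is illustrative rather than complete. The function names are hypothetical.

```python
# ASSUMPTION: a small, illustrative table of in-tree provisioners and the
# CSI drivers that CSI migration redirects them to. Not a complete list.
IN_TREE_TO_CSI = {
    "kubernetes.io/aws-ebs": "ebs.csi.aws.com",
    "kubernetes.io/azure-disk": "disk.csi.azure.com",
    "kubernetes.io/azure-file": "file.csi.azure.com",
    "kubernetes.io/gce-pd": "pd.csi.storage.gke.io",
    "kubernetes.io/cinder": "cinder.csi.openstack.org",
    "kubernetes.io/vsphere-volume": "csi.vsphere.vmware.com",
}


def is_in_tree(provisioner: str) -> bool:
    """Heuristic: in-tree provisioners are conventionally prefixed 'kubernetes.io/'."""
    return provisioner.startswith("kubernetes.io/")


def describe(provisioner: str) -> str:
    """Say whether a provisioner is in-tree and, if the table knows it, its CSI replacement."""
    if not is_in_tree(provisioner):
        # Out-of-tree provisioners (e.g. CSI drivers) must be installed separately.
        return f"{provisioner}: out-of-tree, must be installed in the cluster"
    target = IN_TREE_TO_CSI.get(provisioner)
    if target is None:
        return f"{provisioner}: in-tree, no CSI mapping in this table"
    return f"{provisioner}: in-tree, migrates to {target}"
```

Running `describe` over the provisioners listed by `kubectl get storageclass` is a quick way to spot which default StorageClasses still use in-tree plugins.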

What is the CSI

CSI is an open, independent interface specification through which third-party storage providers can offer storage operations to container orchestration systems (Kubernetes, Docker Swarm, Apache Mesos, etc.). As mentioned before, it also enables those third-party providers to develop their plugins and ship code without waiting for Kubernetes releases. Overall, it is a great community effort by members of the Kubernetes, Docker and Mesosphere communities, and the interface standardises the model for integrating storage systems.

This also means developers and operators only have to worry about one storage configuration, which stays in line with the portability premise of Kubernetes and other container orchestrators.
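The portability point is essentially programming against an interface. The real CSI specification defines gRPC services with RPCs such as `CreateVolume` and `DeleteVolume`; the Python sketch below only mimics the idea. The `StorageDriver` base class, the two made-up vendor drivers and `provision_for_claim` are all hypothetical, chosen to show that the orchestrator-side code does not change when the driver behind it does.

```python
from abc import ABC, abstractmethod
from typing import Dict


class StorageDriver(ABC):
    """Hypothetical, heavily simplified stand-in for a CSI controller plugin."""

    @abstractmethod
    def create_volume(self, name: str, capacity_bytes: int) -> str:
        """Provision a volume and return its backend-specific ID."""

    @abstractmethod
    def delete_volume(self, volume_id: str) -> None:
        """Release the volume with the given ID."""


class VendorADriver(StorageDriver):
    """Made-up vendor that derives volume IDs from the requested name."""

    def __init__(self) -> None:
        self.volumes: Dict[str, int] = {}

    def create_volume(self, name: str, capacity_bytes: int) -> str:
        volume_id = f"vendor-a/{name}"
        self.volumes[volume_id] = capacity_bytes
        return volume_id

    def delete_volume(self, volume_id: str) -> None:
        self.volumes.pop(volume_id, None)


class VendorBDriver(StorageDriver):
    """Made-up vendor that hands out sequential IDs instead."""

    def __init__(self) -> None:
        self.next_id = 1
        self.volumes: Dict[str, int] = {}

    def create_volume(self, name: str, capacity_bytes: int) -> str:
        volume_id = f"vol-{self.next_id:04d}"
        self.next_id += 1
        self.volumes[volume_id] = capacity_bytes
        return volume_id

    def delete_volume(self, volume_id: str) -> None:
        self.volumes.pop(volume_id, None)


def provision_for_claim(driver: StorageDriver, claim_name: str, size_gib: int) -> str:
    """Orchestrator-side logic: identical no matter which driver is plugged in."""
    return driver.create_volume(f"pvc-{claim_name}", size_gib * 1024**3)
```

Swapping `VendorADriver()` for `VendorBDriver()` changes the IDs that come back, but not a single line of `provision_for_claim`, which is the benefit a standard interface gives both vendors and operators.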

CSI Driver Responsibility

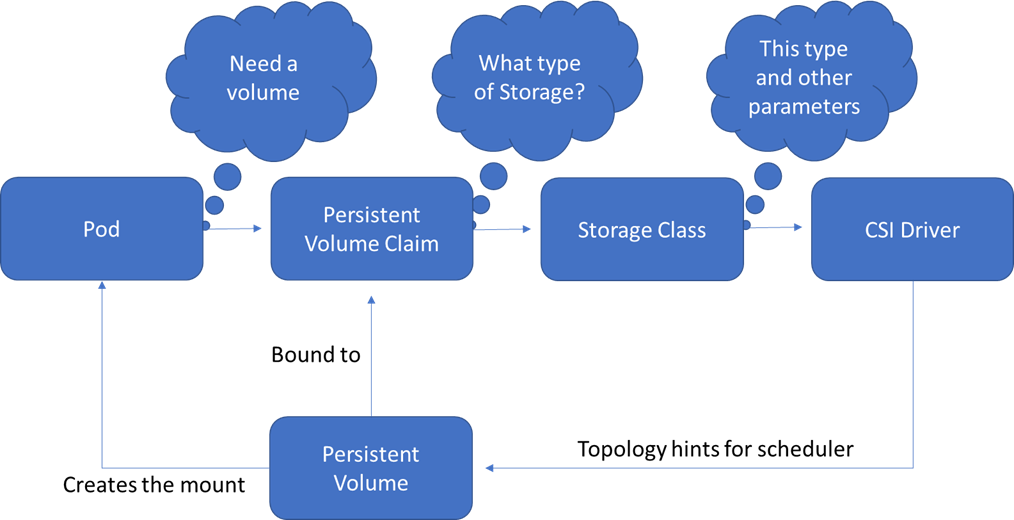

Going a little deeper into the responsibilities (I may come back to this in a follow-up post, as I find the process that has been standardised here intriguing), there are four things to consider about what is happening under the hood with the CSI driver.

CSI Driver – Must be installed on each node that will leverage the storage. I have only seen the CSI pods running within the kube-system namespace, so my assumption at this stage is that the driver needs to run there as a privileged pod. There are three services worth mentioning:

Identity Service – This must be on any node that will use the CSI driver. It reports information about the driver and its capabilities, for example whether snapshots or storage-topology-aware pod scheduling are supported.

Controller Service – Makes the volume-level decisions, such as creating, deleting, attaching and detaching volumes, and does not need to run on a worker node.

Node Service – Like the identity service, it must run on every node that will use the driver; it handles the node-local work of mounting volumes so pods can use them.
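The split between the services is easiest to see in the order a volume moves through them. For a driver that supports the optional attach and staging steps, the CSI specification describes a lifecycle of `CreateVolume`, `ControllerPublishVolume`, `NodeStageVolume`, `NodePublishVolume`, and the reverse on teardown. The toy Python sketch below models that ordering in memory. The classes, the hypothetical `toy.csi.example.com` driver name and the in-memory attachment tracking are simplifications for illustration, not the gRPC API a real driver implements.

```python
from typing import Dict, List, Set


class IdentityService:
    """Toy stand-in for GetPluginCapabilities / Probe."""

    def __init__(self, name: str, capabilities: Set[str]) -> None:
        self.name = name
        self.capabilities = set(capabilities)

    def get_plugin_capabilities(self) -> Set[str]:
        return set(self.capabilities)

    def probe(self) -> bool:
        return True  # a real driver would check that its backend is reachable


class ControllerService:
    """Volume-level operations; does not have to run on a worker node."""

    def __init__(self) -> None:
        # volume_id -> set of node IDs the volume is currently attached to
        self.attachments: Dict[str, Set[str]] = {}

    def create_volume(self, volume_id: str) -> None:
        self.attachments.setdefault(volume_id, set())

    def controller_publish_volume(self, volume_id: str, node_id: str) -> None:
        if volume_id not in self.attachments:
            raise KeyError(f"unknown volume {volume_id}")
        self.attachments[volume_id].add(node_id)

    def controller_unpublish_volume(self, volume_id: str, node_id: str) -> None:
        self.attachments[volume_id].discard(node_id)

    def delete_volume(self, volume_id: str) -> None:
        if self.attachments.get(volume_id):
            raise RuntimeError(f"{volume_id} is still attached")
        self.attachments.pop(volume_id, None)


class NodeService:
    """Node-local mount operations; one instance per node using the driver."""

    def __init__(self, node_id: str, controller: ControllerService) -> None:
        self.node_id = node_id
        self.controller = controller  # toy shortcut: a real node inspects the device
        self.staged: Set[str] = set()
        self.published: Dict[str, str] = {}  # target_path -> volume_id

    def node_stage_volume(self, volume_id: str) -> None:
        if self.node_id not in self.controller.attachments.get(volume_id, set()):
            raise RuntimeError(f"{volume_id} is not attached to {self.node_id}")
        self.staged.add(volume_id)

    def node_publish_volume(self, volume_id: str, target_path: str) -> None:
        if volume_id not in self.staged:
            raise RuntimeError(f"{volume_id} must be staged before publishing")
        self.published[target_path] = volume_id

    def node_unpublish_volume(self, target_path: str) -> None:
        self.published.pop(target_path, None)

    def node_unstage_volume(self, volume_id: str) -> None:
        self.staged.discard(volume_id)


def run_lifecycle(volume_id: str, node_id: str, target_path: str) -> List[str]:
    """Drive one volume through provision, mount, unmount and delete."""
    identity = IdentityService(
        "toy.csi.example.com", {"CONTROLLER_SERVICE", "VOLUME_ACCESSIBILITY_CONSTRAINTS"}
    )
    controller = ControllerService()
    node = NodeService(node_id, controller)
    if not identity.probe():
        raise RuntimeError("driver not ready")
    plan = [
        ("CreateVolume", lambda: controller.create_volume(volume_id)),
        ("ControllerPublishVolume", lambda: controller.controller_publish_volume(volume_id, node_id)),
        ("NodeStageVolume", lambda: node.node_stage_volume(volume_id)),
        ("NodePublishVolume", lambda: node.node_publish_volume(volume_id, target_path)),
        ("NodeUnpublishVolume", lambda: node.node_unpublish_volume(target_path)),
        ("NodeUnstageVolume", lambda: node.node_unstage_volume(volume_id)),
        ("ControllerUnpublishVolume", lambda: controller.controller_unpublish_volume(volume_id, node_id)),
        ("DeleteVolume", lambda: controller.delete_volume(volume_id)),
    ]
    done: List[str] = []
    for rpc_name, call in plan:
        call()  # each step raises if it is attempted out of order
        done.append(rpc_name)
    return done
```

The ordering checks are the interesting part: the node service refuses to stage a volume the controller has not attached, and the controller refuses to delete a volume that is still attached somewhere.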

Example workflow

This was more of a theory post to help me get my head around storage in Kubernetes. The topic caught my interest because of a newly released open-source project called Kubestr. This handy little tool lets you identify the storage in your cluster, both in-tree provisioners and CSI, and validate that your CSI driver is configured correctly. Lastly, it lets you run a Flexible I/O (FIO) test against your storage, whether in-tree or CSI, which gives you a nice way to automate the benchmarking of your storage systems. In the next posts, we are going to walk through getting CSI drivers configured in the public cloud, likely starting with AWS and Microsoft Azure, both of which have pre-release versions available today.
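If you want to fold FIO results into your own automation, one option is to keep fio's native JSON report (produced with `fio --output-format=json`) and post-process it. The sketch below assumes that format, in which each entry in `jobs` carries `read` and `write` sections with `iops` and `bw` (KiB/s) fields. It does not parse Kubestr's own output, and the `meets_baseline` check and any thresholds you pass it are hypothetical.

```python
import json
from typing import Dict


def summarize_fio(json_text: str) -> Dict[str, float]:
    """Sum read/write IOPS and bandwidth (KiB/s) across all jobs in a fio JSON report."""
    report = json.loads(json_text)
    totals = {"read_iops": 0.0, "write_iops": 0.0, "read_bw_kib": 0.0, "write_bw_kib": 0.0}
    for job in report.get("jobs", []):
        for direction in ("read", "write"):
            stats = job.get(direction, {})
            totals[f"{direction}_iops"] += float(stats.get("iops", 0.0))
            totals[f"{direction}_bw_kib"] += float(stats.get("bw", 0.0))
    return totals


def meets_baseline(totals: Dict[str, float], min_read_iops: float, min_write_iops: float) -> bool:
    """Hypothetical pass/fail gate: both read and write IOPS must reach the given minimums."""
    return totals["read_iops"] >= min_read_iops and totals["write_iops"] >= min_write_iops
```

A CI job could call `summarize_fio` on each run's report and fail when `meets_baseline` returns `False`, turning the benchmark into a regression check for a storage class.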

If you have any feedback, or if I have missed something, drop it in the comments below.
